Background

The purpose of this research program is to evaluate how effectively changes in roadside weather can be detected using only static images from commodity traffic cameras as input. If successful, the results will provide a cost-effective alternative to the state-of-the-art dedicated weather-sensing hardware currently deployed by Department of Transportation (DOT) agencies. In areas prone to extreme weather, DOTs may deploy weather-sensing technology so that drivers can be alerted when driving conditions become dangerous. However, due to limited budgets, this sensing technology is confined to the most heavily traveled highways that are most likely to experience extreme weather. This limited deployment is a public health concern that has already resulted in many avoidable collisions and deaths on highways across the nation. A cost-effective alternative that can be deployed using existing physical infrastructure would therefore be of great value.

Approach

To detect changes in roadside weather conditions using only snapshots collected from traffic cameras, the project team is actively collecting data from DOT agencies, including both traffic camera snapshots and, where available, dedicated weather sensor data. This raw data arrives in a wide variety of native formats and must first be coalesced into a common format. It is then labeled, either from the dedicated weather sensor data or by human labelers where no sensor-based ground truth is available. To facilitate manual labeling, a web-based tool has been developed that supports both snapshot labeling and high-level visualization of data trends. This tool may later be used to crowd-source labeling across SwRI if that approach is deemed worthwhile.
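The labeling step can be sketched in a few lines of Python. This is a minimal illustration only, not the project's pipeline: the function name `label_snapshot`, the 400 m visibility threshold, and the 10-minute matching window are all assumptions chosen for the example. A snapshot receives a sensor-derived label when a sensor reading exists close enough in time; otherwise it is left for human labeling.

```python
from bisect import bisect_left
from datetime import datetime, timedelta

# Assumed threshold: visibility below this many meters counts as "bad visibility".
BAD_VISIBILITY_METERS = 400.0
# Assumed tolerance: the nearest sensor reading must be within this window.
MAX_GAP = timedelta(minutes=10)


def label_snapshot(snapshot_time, sensor_readings):
    """Label one snapshot from sensor data, or return None if a human must label it.

    sensor_readings is a list of (timestamp, visibility_meters) tuples
    sorted by timestamp.
    """
    if not sensor_readings:
        return None  # no sensor-based ground truth at this site
    times = [t for t, _ in sensor_readings]
    i = bisect_left(times, snapshot_time)
    # The nearest reading is either just before or just after the snapshot.
    candidates = sensor_readings[max(i - 1, 0):i + 1]
    nearest_time, visibility = min(
        candidates, key=lambda reading: abs(reading[0] - snapshot_time)
    )
    if abs(nearest_time - snapshot_time) > MAX_GAP:
        return None  # reading too far from the snapshot in time to trust
    if visibility < BAD_VISIBILITY_METERS:
        return "bad visibility"
    return "good visibility"


readings = [
    (datetime(2020, 1, 1, 12, 0), 1500.0),
    (datetime(2020, 1, 1, 12, 30), 150.0),
]
print(label_snapshot(datetime(2020, 1, 1, 12, 3), readings))
print(label_snapshot(datetime(2020, 1, 1, 12, 15), readings))
```

Snapshots for which the function returns `None` would be routed to the manual labeling tool.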

Concurrent with the data curation efforts, the project team is developing a convolutional neural network (CNN) that will be trained to distinguish “good visibility” from “bad visibility,” as well as to identify specific weather conditions, including “snow,” “rain,” or “dust storm.” The novel aspect of the approach is that the CNN is applied not to detect specific elements within an image, but to consider the entire scene and identify a characteristic of that scene.
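To make the scene-level idea concrete, the toy forward pass below produces a single label for a whole image rather than boxes around objects. It is a sketch under strong assumptions and not the project's network: it uses one convolution filter, a ReLU, global average pooling, and a sigmoid, and the kernel, `weight`, and `bias` values are hand-picked for illustration rather than learned. All function names are hypothetical.

```python
import math


def conv2d_valid(image, kernel):
    """Slide the kernel over the image with no padding and stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def scene_score(image, kernel, weight, bias):
    """Return one score in (0, 1) for the whole scene."""
    feature_map = conv2d_valid(image, kernel)
    activated = [max(0.0, v) for row in feature_map for v in row]  # ReLU
    pooled = sum(activated) / len(activated)  # global average pooling
    return 1.0 / (1.0 + math.exp(-(weight * pooled + bias)))  # sigmoid


def classify_scene(image, kernel, weight, bias, threshold=0.5):
    """Map the scene score to one of the two visibility labels."""
    score = scene_score(image, kernel, weight, bias)
    return "bad visibility" if score >= threshold else "good visibility"


# Hand-picked, untrained parameters: a horizontal-difference filter, and a
# negative weight so that weak edge response pushes the score toward "bad".
KERNEL = [[1.0, -1.0]]
WEIGHT, BIAS = -10.0, 2.0

sharp = [[0.0, 1.0, 0.0, 1.0], [1.0, 0.0, 1.0, 0.0]] * 2  # high contrast
hazy = [[0.5] * 4 for _ in range(4)]                      # uniform gray

print(classify_scene(sharp, KERNEL, WEIGHT, BIAS))
print(classify_scene(hazy, KERNEL, WEIGHT, BIAS))
```

Because pooling averages over every position in the feature map, the output depends on the scene as a whole and not on where any particular object sits. A trained CNN would learn many such filters and the final weights from the labeled snapshots.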

Accomplishments

To date, the project team has interfaced with the disparate data sources available from four DOT agencies, coalesced that data into common formats, and stored it on a project server. As mentioned above, the team has also developed a web-based tool to facilitate labeling and the analysis of high-level trends in the collected data. This ability to view data trends has revealed erroneous sensor data and enabled the team to “clean” the data used by the CNN. Finally, an initial CNN implementation for distinguishing “good visibility” from “bad visibility” reached 84 percent accuracy. These initial tests used a limited dataset, and additional work is required to detect specific weather conditions, but the initial results are very promising.
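The summary does not describe how erroneous sensor readings were identified beyond inspecting data trends, so the following is only one plausible automated counterpart, sketched under assumptions. The function name `clean_readings`, the valid range of 0 to 20,000 meters, and the rule that more than five identical consecutive readings indicate a stuck sensor are all hypothetical.

```python
from itertools import groupby


def clean_readings(values, low=0.0, high=20000.0, max_run=5):
    """Remove implausible visibility readings (meters) from a time-ordered list.

    Two assumed rules are applied:
    1. Drop missing values and values outside [low, high].
    2. Drop any run of more than max_run identical consecutive values,
       treating it as a stuck sensor.
    """
    in_range = [v for v in values if v is not None and low <= v <= high]
    cleaned = []
    for _, run in groupby(in_range):
        run = list(run)
        if len(run) <= max_run:
            cleaned.extend(run)
    return cleaned


raw = [1200.0, -1.0, 900.0, None, 99999.0, 300.0]
print(clean_readings(raw))
```

A rule such as the stuck-sensor check would need tuning per sensor type, since some instruments legitimately report a constant maximum value in clear conditions.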

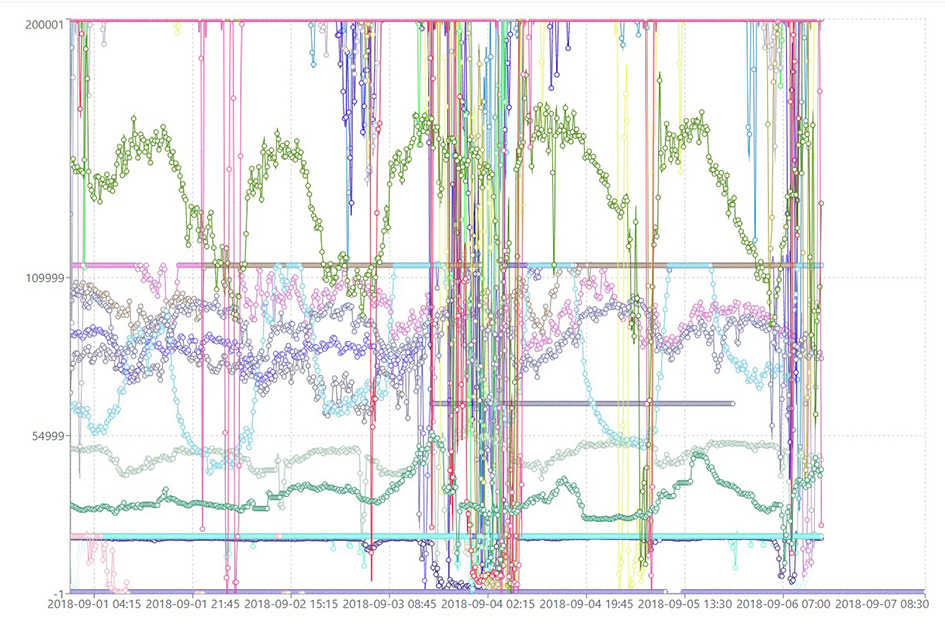

Figure 1: A high-level view of sensor data.