Background

Biomechanical analysis through 3D motion capture is a valuable tool for accurately quantifying human movement. The resulting measurements can be used to improve performance, increase efficiency, and identify potential injury risks for sports athletes and tactical athletes. While most 3D motion capture systems use reflective markers and specialized cameras, markerless systems are emerging. SwRI has developed a markerless system that requires no expert knowledge of anatomical landmarks, takes far less time to set up and capture measurements, and offers accuracy comparable to marker-based systems.

Current 3D motion capture systems, including SwRI’s markerless motion capture system, are set up to capture video within a predefined space, or stationary capture volume. The cameras in the system are stationary and must be calibrated before they can be used for motion capture. To capture a larger area, more cameras must be added. This makes it impractical to analyze biomechanics over a larger area such as an obstacle course.

This project will extend SwRI’s existing markerless motion capture system to perform biomechanical assessments in a non-stationary capture volume. Successful completion of this research will yield a state-of-the-art technology for many human performance applications, such as biomechanical assessment in tactical athlete training programs and for Olympic and professional athletes.

Approach

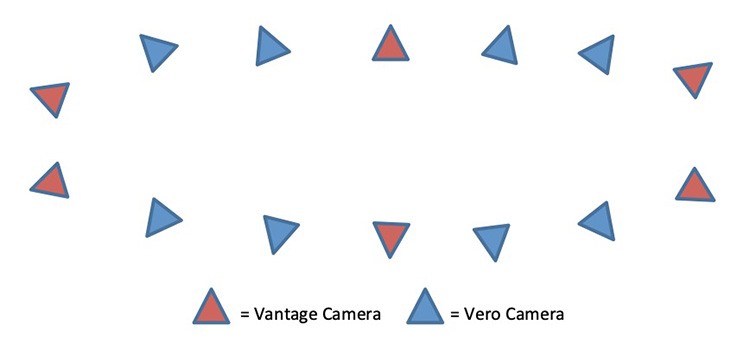

To support validation and tracking of the mobile cameras, a marker-based motion capture system will be leveraged. This system uses the following hardware:

- Six 5-megapixel infrared cameras

- Eight 2.2-megapixel infrared cameras

The same marker cluster model and labeling skeleton will be used as in previous internal research and external client work.

A second camera system, consisting of two USB 3.0 machine vision cameras, is used to follow the subject along a 48-foot track. The cameras are attached to a tripod supported by the track system. Both camera systems cover an area of roughly 48 feet by 7 feet.

The research team will experiment with techniques from the class of monocular Simultaneous Localization and Mapping (SLAM) algorithms, exploring modifications that treat camera motion and subject motion as a single fused problem and solve for both together.
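To illustrate why camera motion and subject motion are coupled, the sketch below maps a joint position observed in a moving camera's frame into a fixed world frame using the camera's pose. It is not the project's algorithm, only the standard rigid-body transform that any such pipeline relies on. The function names, the choice of z as the vertical axis, and all numeric values are assumptions made for illustration.

```python
import math

def yaw_rotation(theta):
    """Return a 3x3 rotation about the vertical (z) axis by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]

def camera_to_world(point_cam, rotation, translation):
    """Map a point from the camera frame to the world frame: R * p + t."""
    return [
        sum(rotation[i][j] * point_cam[j] for j in range(3)) + translation[i]
        for i in range(3)
    ]

# Hypothetical pose: the camera has moved 3 units along the track (x),
# sits 1.2 units above the floor, and has turned 90 degrees about z.
R = yaw_rotation(math.pi / 2)
t = [3.0, 0.0, 1.2]

# Hypothetical joint center, 2 units in front of the camera along its x axis.
joint_cam = [2.0, 0.0, 0.0]
joint_world = camera_to_world(joint_cam, R, t)
print(joint_world)
```

An error in the estimated camera pose (`R`, `t`) carries directly into every world-frame joint position, which is one reason to estimate the two motions together rather than one after the other.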

Accomplishments

The two camera systems have been installed, and initial capture sessions have begun with a single subject. These captures are now being processed to generate validation data for both the subject (joint centers and joint angles) and the mobile cameras. This data will be used to begin selecting and refining the computer vision algorithms that will solve for the mobile cameras’ motion through 3D space.
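One common way to score an estimated camera trajectory against marker-based reference data is the root-mean-square error (RMSE) of position over time-aligned frames. The sketch below shows that metric only. The source does not state which metric the project uses, and the function name and sample values are hypothetical.

```python
import math

def position_rmse(estimated, reference):
    """RMSE of 3D position error over frames that are already time-aligned."""
    if not estimated or len(estimated) != len(reference):
        raise ValueError("trajectories must be non-empty and of equal length")
    total_sq_error = 0.0
    for est, ref in zip(estimated, reference):
        # Squared Euclidean distance between the two positions in this frame.
        total_sq_error += sum((e - r) ** 2 for e, r in zip(est, ref))
    return math.sqrt(total_sq_error / len(estimated))

# Hypothetical camera positions (x, y, z), one per frame.
reference = [[0.0, 0.0, 4.0], [1.0, 0.0, 4.0], [2.0, 0.0, 4.0]]
estimated = [[0.0, 0.0, 4.0], [1.0, 0.3, 4.4], [2.0, 0.0, 4.0]]

rmse = position_rmse(estimated, reference)
print(f"camera position RMSE: {rmse:.3f}")
```

The same function could be applied to joint centers by passing per-frame joint positions in place of camera positions.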

Figure 1: Camera position configuration

Figure 2: Example of the initial video and marker-based data.